Overview

First, let’s make sure I don’t set expectations too high. Even though SQL Server 2016 and up has excellent support for JSON formatted data and even though Entity Framework Core’s provider system allows for providers to be created that work with document databases, the SQL Server provider does not yet have first class JSON support. Though lacking in integrated support, we can still work with JSON data in SQL Server using Entity Framework Core Value Conversion (new in EF Core 2.1).

As you’ll see below there isn’t much that needs to be done to support JSON formatted data in your relational object structure. This is due both to the value converters themselves as well as EF Core’s ability to smartly determine what logic can be translated for remote queries (SQL) and what will have to be done in memory (client evaluation). This is important because Entity Framework Core will not know how to translate queries for objects stored as JSON text to SQL and will have to use client evaluation.

This is directly contrasted to what is available in Entity Framework 6 which attempts to translate the full query for remote execution and will throw a runtime exception if its unable to do so. Developers have to be knowledgeable about which statements are supported and structure their queries accordingly which results in inelegant inclusions of .ToArray() or .ToList() embedded in their queries to split the remote and client evaluated portions.

If a developer wants to avoid client side evaluations, EF Core will by default log a warning that a client evaluation is needed so developers can restructure their data or queries. They can go a step further to treat those warnings as exceptions which would then make the behavior similar to EF 6 resulting in a runtime exception when EF Core would attempt to use client evaluation. A lot of thought has been put into making EF Core easy to use while providing access to information developers need to evaluate performance.

With all that said, let’s jump into Value Converters and how we can use them to serialize our objects as JSON formatted strings without jumping through hoops.

Getting Started

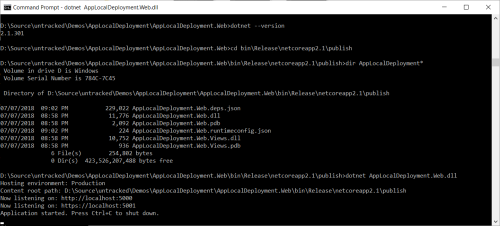

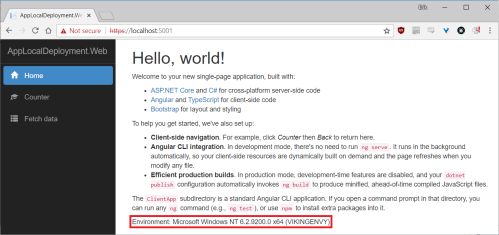

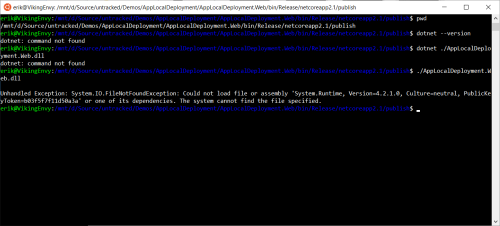

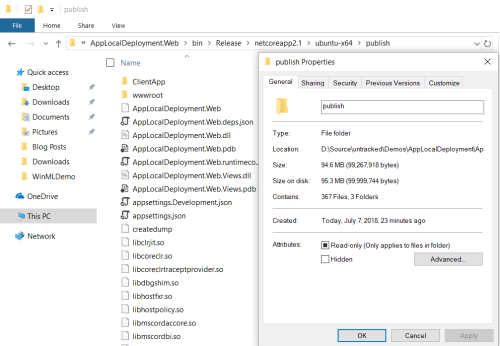

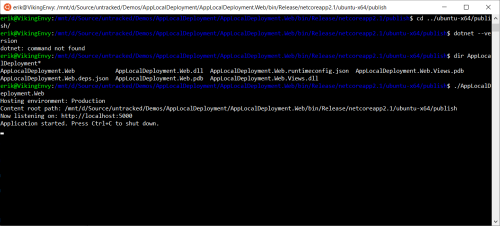

You will need an application that can use Entity Framework Core 2.1 in order to use value conversion. The type of application doesn’t matter otherwise. The code listings and log output in this post came from an ASP.NET Core 2.1 web application but this works just as well with console applications.

Setting up your models and context is done as normal for a code first solution until just before you generate creation scripts for your database or run the code for the first time against an existing database. For scaffolding a context from an existing database, some extra work is needed to replace the string properties mapped to the nvarchar(max) columns that hold JSON data with the classes that will map to the JSON data itself. There is no sample project to reference since the necessary code is small and easy to understand. I’ve included the code listings for the important classes instead.

Creating the Value Converter

There are generally two options for creating the value converter. Taking a look at the code listing for the DemoDbContext.cs class you will see both. The first option is a bit more readable in the context class in my opinion and is easily repeated for more properties should you want to have several properties serialized into JSON. It extracts the implementation logic to a separate class which, if you look at the code, is less readable than Option 2 but has the additional benefit of making it convenient to set a standard set of JSON serializer settings to be used on all the properties you want converted.

Option 2 has the benefit of being less work and less code overall while showing what conversion is taking place. Applying JSON serializer settings would only impact the readability a little bit and the code can be copied and pasted for any additional properties you want converted to JSON instead of being mapped to their own tables. Personally I prefer to use the separate class especially if I am going to use it for more than one property if for no other reason than the convenience of ensuring the same JSON serializer settings will be used on all the properties and it reduces duplicated implementation logic.

DemoDbContext.cs

using EFJsonValueConverter.Models.ValueConverters;

using Microsoft.EntityFrameworkCore;

using Newtonsoft.Json; //For option 2 below

namespace EFJsonValueConverter.Models

{

public class DemoDbContext : DbContext

{

public DemoDbContext(DbContextOptions<DemoDbContext> options)

: base(options)

{ }

public DbSet<Form> Forms { get; set; }

protected override void OnModelCreating(ModelBuilder modelBuilder)

{

base.OnModelCreating(modelBuilder);

//Option 1: Using a value converter in a separate class

modelBuilder.Entity<Form>()

.Property(p => p.ResponseData)

.HasConversion(JsonValueConverter<ResponseData>.Default);

//Option 2: Putting the value conversion logic directly in DbContext

//With this method you do not need EFJsonValueConverter.cs at all.

modelBuilder.Entity<Form>()

.Property(p => p.ResponseData)

.HasConversion(

entity => JsonConvert.SerializeObject(entity),

value => JsonConvert.DeserializeObject<ResponseData>(value));

}

}

}

EFJsonValueConverter.cs

using Microsoft.EntityFrameworkCore.Storage.ValueConversion;

using Newtonsoft.Json;

namespace EFJsonValueConverter.Models.ValueConverters

{

public class JsonValueConverter<TEntity> : ValueConverter<TEntity, string>

{

public JsonValueConverter(JsonSerializerSettings serializerSettings = null,

ConverterMappingHints mappingHints = null)

: base(model => JsonConvert.SerializeObject(model, serializerSettings),

value => JsonConvert.DeserializeObject<TEntity>(value, serializerSettings),

mappingHints)

{

//No ctor body; everything is passed through the call to base()

}

public static ValueConverter Default { get; } =

new JsonValueConverter<TEntity>(null, null);

public static ValueConverterInfo DefaultInfo { get; } =

new ValueConverterInfo(typeof(TEntity),

typeof(string),

i => new JsonValueConverter<TEntity>(null, i.MappingHints));

}

}

Note that the DefaultInfo property is called for in the EF Core documentation but the Default property is not. That’s just a convenience property to use in the DB context class in the HasConversion(...) call and isn’t required. Just move the property body into the HasConversion(...) call instead.

Form.cs

using System;

using System.ComponentModel.DataAnnotations;

namespace EFJsonValueConverter.Models

{

public class Form

{

public long Id { get; set; }

[Required]

public bool IsDeleted { get; set; } = false;

[Required, StringLength(50)]

public string DisplayName { get; set; }

[Required]

public DateTimeOffset Created { get; set; }

[Required]

public DateTimeOffset LastModified { get; set; }

public int SchemaCompatabilityLevel { get; set; }

//Serialized as JSON string using HasConversion(...)

public ResponseData ResponseData { get; set; }

}

}

The properties in ResponseData.cs are not important here so I’ve omitted the code listing. The class just needs to conform to whatever functionality the JSON serializer can support.

Query Impact

As mentioned earlier, EF Core will not understand how to translate LINQ queries in your code to compatible SQL for properties that are converted to JSON. Even if the relational database you are using supports querying JSON directly, EF Core does not know that’s what you’re translating your object into; it just sees you’ve created a string. But since EF Core can handle client side evaluation automatically, it appears like it actually is making that leap. It’s important to remember this because in order for the query to be evaluated on the client, all the candidate records that need to be searched must first be retrieved and materialized. Only after that has happened can EF Core evaluate any queries using objects which are being converted to JSON for storage. If your primary query logic depends on the data inside the JSON object(s), performance will plummet as more data is added to the database.

One way to reduce the impact of client side evaluation would be to identify the properties you most query on and ensure they are in an object that maps to a table directly, promoting (or duplicating) them from the JSON object as necessary.

If that approach is not feasible, you could create custom stored procedures that leverage the JSON support in SQL Server and expose those through your context. That option requires you to know which properties are actually being serialized to JSON and know which stored procedures are the right ones to use. This method is a bit more work overall and could lead to unexpected results if the schema changes on the JSON data frequently. Understanding how SQL Server operates on JSON formatted data would be important if you plan to leverage this option.

To demonstrate the client side evaluation impact, let’s take a look at some queries.

var forms = _context.Forms.Where(f => f.ResponseData.IsRush);

In this example ResponseData is a property of the Form class and is being serialized to JSON via a value converter. Since ResponseData looks like a string to EF Core, it doesn’t know how to generate the SQL necessary to query for the property called IsRush directly. Instead it’s going to retrieve all the records in the Forms table and materialize them in memory. The value converter kicks in when the objects are being materialized and converts the JSON strings to the ResponseData objects. Once it has all the data it can then evaluate the query and return the records containing a true value for IsRush. Here is the log output for the query:

Microsoft.EntityFrameworkCore.Query:Warning: The LINQ expression

'where [f].ResponseData.IsRush' could not be translated and will be

evaluated locally.

Microsoft.EntityFrameworkCore.Database.Command:Information: Executed

DbCommand (31ms) [Parameters=[], CommandType='Text', CommandTimeout='30']

SELECT [f].[Id], [f].[Created], [f].[DisplayName], [f].[IsDeleted],

[f].[LastModified], [f].[ResponseData], [f].[SchemaCompatabilityLevel]

FROM [Forms] AS [f]

Notice the SQL query doesn’t have any mention of the IsRush property but if you ran this code against data you’d see as I did that the results were correctly filtered. Obviously this could be problematic if you have a lot of data. Bringing back your entire set of data to evaluate a property in memory is not a good idea. To mitigate this, limit the records in some other way to reduce the number coming back.

var forms = _context.Forms.Where(f => !f.IsDeleted && f.ResponseData.IsRush);

EF Core can translate this into two queries. First it will generate the SQL to bring back only forms which are not marked as deleted then query the result set in memory for those that need to be rushed. This approach works if the properties you’re primarily querying against are mapped to table columns and the JSON data only needs to queried to make a small refinement in the results.

Microsoft.EntityFrameworkCore.Query:Warning: The LINQ expression

'where [f].ResponseData.IsRush' could not be translated and will be

evaluated locally.

Microsoft.EntityFrameworkCore.Database.Command:Information: Executed

DbCommand (26ms) [Parameters=[], CommandType='Text', CommandTimeout='30']

SELECT [f].[Id], [f].[Created], [f].[DisplayName], [f].[IsDeleted],

[f].[LastModified], [f].[ResponseData], [f].[SchemaCompatabilityLevel]

FROM [Forms] AS [f]

WHERE [f].[IsDeleted] = 0

Another approach would be to look at the types of queries you’re doing. If looking for records that need to be rushed is a common activity, see if the IsRush property can be promoted to the containing class. In this example that would mean storing IsRush on the Form class instead of in ResponseData.

var forms = _context.Forms.Where(f => f.IsRush);

Now EF Core can generate the SQL directly and bring back only the records you want. If that option is not possible, creating a function or stored procedure you can call becomes a bit more effort but can enable you to make more complex queries for things you do often.

Microsoft.EntityFrameworkCore.Database.Command:Information: Executed

DbCommand (21ms) [Parameters=[], CommandType='Text', CommandTimeout='30']

SELECT [f].[Id], [f].[Created], [f].[DisplayName], [f].[IsDeleted],

[f].[LastModified], [f].[ResponseData], [f].[SchemaCompatabilityLevel],

[f].[IsRush]

FROM [Forms] AS [f]

WHERE [f].[IsRush] = 0

Wrapping Up

One overlooked aspect to what we’ve done above is that we don’t necessarily require SQL Server to support JSON. Entity Framework Core is doing all the serialization and deserialization and querying in the simplest use cases. Where SQL Server support comes in handy is if you find you absolutely must be able to query into the JSON data on the server. Adding stored procedures or functions which you can call through Entity Framework would open that possibility up while waiting for JSON querying support to land in a future version. As a cautionary note, I’m not sure if JSON support has been committed to for any particular release milestone but it’s because there has been such extensive discussion around the right way to achieve it that I’m sure it’s a matter of “when” and not “if” it will come.

So who should use this? First anyone who was using [NotMapped] properties to wrap Entity Framework entities to inject JSON serialization and deserialization would benefit from this cleaner approach. Anyone who has determined they have that need would find this approach simple and removes public properties that shouldn’t be accessed directly which support that conversion. Other developers who have an object that is expected to change frequently and don’t want to continuously alter their database schema could also benefit from this approach especially if the effort to introduce a separate document database is too high or not feasible for their environment. This approach should be used sparingly though; developers shouldn’t go looking for ways to use this if there is another acceptable way to achieve their goals.

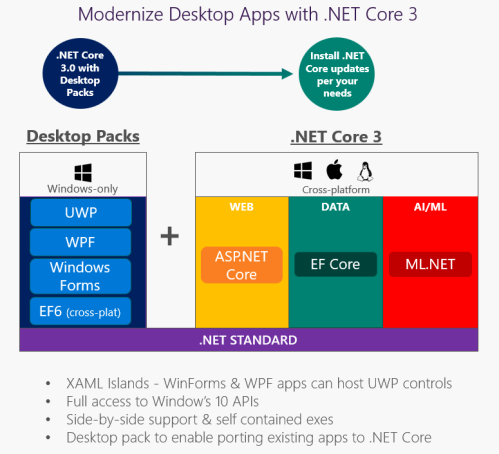

Entity Framework Core has come a long way in a short amount of time and the possibilities for developers continue to grow. Its design is flexible and extensible and the provider approach opens it up to new usage scenarios that were not possible previously. I am eagerly awaiting query support for JSON formatted data in the relational or SQL Server provider but in the meantime I know I can fall back on value converters and other features of EF Core to get the job done.